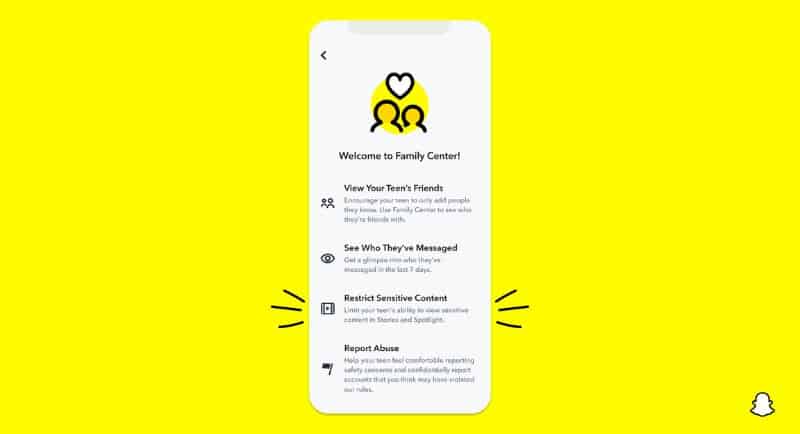

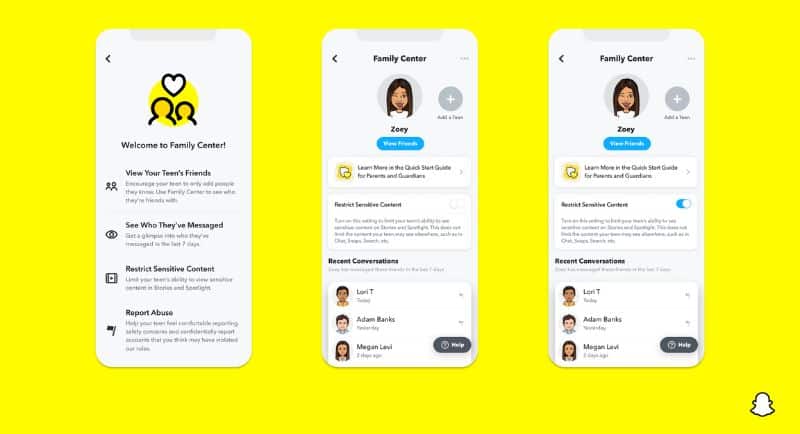

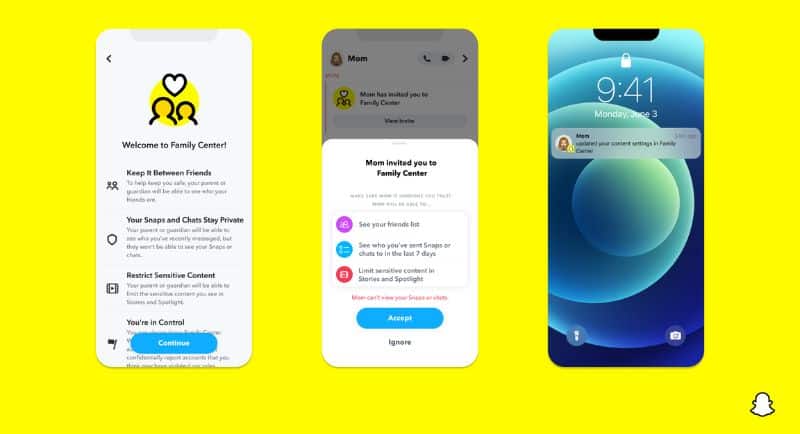

Snapchat has revealed updates to its Family Centre to help parents keep their children safe online.

Given the vast amount of content online, the platform's Content Controls have been updated so that parents can filter what their children see.

According to eSafety research, 94% of Australian parents feel that the safety of their children online is important, but many may not know where to start.

Because of this, Snapchat has made updates to its Family Centre Content Controls to give parents and guardians more choices and support when it comes to filtering the content their children can view.

The update allows parents to turn on Content Controls and opt to filter out content on Snapchat Stories or in Spotlight that may have been identified as sensitive or suggestive.

As primarily a visual messaging app, Snapchat helps people communicate with their family and friends, but there are other parts of the app where content may reach a larger audience.

These include the Stories tab, where Snapchatters can view recommended content from professional media publishers and popular creators, and Spotlight, where Snapchatters can watch the best Snaps created and submitted by the Snapchat community.

Snapchat noted that it does not distribute unmoderated content to a large audience and that content on the platform must adhere to its Community Guidelines and Terms of Service. To be eligible for algorithmic recommendation, content must also meet additional, higher standards, which are enforced through proactive moderation using both technology and human review.

The platform also provides in-app tools for users to report content they find objectionable. Moderators respond quickly to user reports and use the feedback to improve the content experience for all Snapchatters.

On the Stories and Spotlight tabs, Snapchatters may see content recommended by Snapchat's algorithms, including content from accounts they do not follow. While Snapchat uses algorithms to feature content based on individual interests, they are applied to a limited and vetted pool of content, an approach the company says differs from that of other platforms.

Snapchatters represent a diverse range of ages, cultures and beliefs, and the platform aims to provide a safe, healthy, valuable experience for all users, including those as young as 13.

Snapchat recognises that many users may see content without actively choosing to, which is why its guidelines are in place to protect Snapchatters from experiences that may be unsuitable or unwanted.

Within the pool of eligible content, Snapchat aims to personalise recommendations, especially for sensitive content. For example, content about acne treatments may seem gross to some Snapchatters, while others may find it useful or fascinating.

Content featuring people in swimwear might seem sexually suggestive, depending on the context or the viewer. While some sensitive content is eligible for recommendation, the platform may decline to recommend it to certain Snapchatters based on their age, location, preferences, or other criteria.

These recommendation eligibility guidelines apply equally to all content, whether it comes from an individual or an organisation of any kind.

Content is not eligible for recommendation to anyone if it is harmful, shocking, exaggerated, deceptive, intended to disgust, or in poor taste.

Accounts that repeatedly or egregiously violate these eligibility criteria may be temporarily or permanently disqualified from recommendations.

Media partners also agree to additional guidelines and terms, which require that content is accurate, fact-checked, and age- or location-gated when appropriate.