By Hannah Melanson, creative director, Innocean Australia

It’s 5pm on a Monday. I’m sharing a mutually blank stare with another one of our creative directors, Dave, as we so often do. But this time, it’s different.

As usual, my gaze is a combination of too much coffee, too little sleep and arguably too many years in this industry. Dave’s? Well, it’s not really his gaze at all.

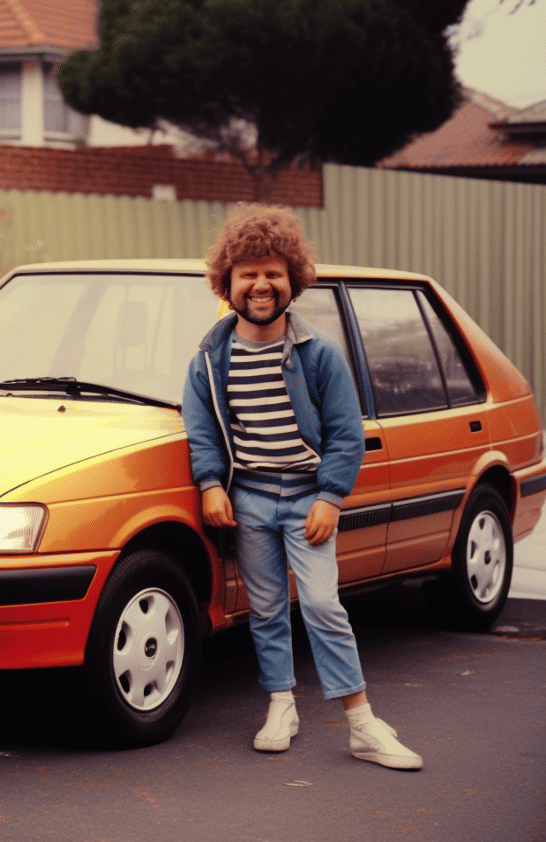

I peer into the misshapen eyes of his Midjourney replica, oddly reminiscent of a Stranger Things cast member from a fever dream, proudly perched against an equally questionable rendition of a ’90s Excel. I think to myself: “Can we really pull this off?”

I pause. I examine all four fingers of his left hand, contemplate his disconcerting grin with equal parts curiosity and concern, and reflect on the pervasive controversy surrounding creativity and AI. I think again: “…Should we?”

It would be a risk. We’d pitched our client Hyundai a stop-motion time-lapse of distinctly Aussie memories from the last three decades, rapidly match-cut to animate their legacy from then to now. And we only had three weeks to make it.

Trouble was, those photos didn’t actually exist. And even if they did, we wouldn’t have the time, permission or means to gather enough of them to achieve the desired effect.

And so, our first foray into creating an (almost) entirely AI-generated ad began. Here’s how we did it – why we did it – and what we learned on our (Mid)journey.

Choosing the right job for the tool

AI image generation tools like Midjourney and DALL-E are a technological marvel. They’re also bizarrely unpredictable to work with, controversial and, particularly in ad land, the biggest of buzzwords.

We weren’t willing to jump on the bandwagon unless it was for the right brief. And this was it. Rather than building an idea around using AI, we wanted to use AI to save an idea at the point of no return. Thankfully, we had a client with an appetite for innovation – and no shortage of trust.

“It was refreshing to leverage AI for its utility, instead of working it into an idea as the empty hero to demonstrate to our clients ‘…and here’s the AI route,’” Adam Hosfal, managing partner for Hyundai, said. “We had less control than anticipated – and it was a leap of faith being at the mercy of the tech – but that in itself made it exhilarating.”

The Celebrating Two Million Cars ad was never about innovation for innovation’s sake. It was about solving a real problem and channelling genuine nostalgia for Australia, commemorating a 37-year love affair with Hyundai and the open road. We may have shifted our methods and means, but we never shifted our focus.

Finding Will…ing partners in crime

Internally, we had the confidence, bravery and blind faith needed to forge ahead. But we needed an experienced Midjourney whisperer to make up for our lack of TV-ready assets. Enter Will Alexander, founder of VFX and post-production studio, Heckler.

“If we didn’t all back each other, we wouldn’t have gotten there,” recounts Will. “It’s not that I wasn’t shitting myself a little bit, but if there was ever a brief, a client and an agency to try this with, it was this one.”

Will and his brilliant team of vision-weavers didn’t back down when we came to them with this new and, frankly, uncharted direction. Within an hour of our brief, a string of alarmingly accurate HyundAIs filled our inboxes. Heckler stepped up with an urgency, optimism and enthusiasm that inspired the rest of us to dive into the unexplored, head first. (Dave’s head first, to be exact, as pictured in the nightmarish photo above.)

Moving fast, crafting slow

One of the many misconceptions about AI is that it’s a shortcut. And oftentimes, it is. But when accuracy is the aim, there’s no substitute for human craft.

An early analogy from Heckler’s executive producer, Steven Marolho, stuck with us throughout the process: “Think of Midjourney like baking a cake. If you like the base, we can add icing and sprinkles through things like retouching and grade. But if you don’t like the base, we have to start all over again.”

And start again we did, generating over 4,000 images to yield the 250+ AI frames required for the film, which then had to be meticulously retouched and refined. Any one of those 4,000 frames could have sunk the project – and some of them nearly did – but the immense amount of human consideration and craft was the life raft that kept the vision afloat.

Building on the much-loved baking analogy that guided our collective creative process, Adrien Girault, Heckler’s head of design, reflected on the experience from prompts through to post: “We were refining the recipe up until the very last day. Even if we did the same brief in a month, or six months, it would be completely different. What would stay the same, however, is the human need.”

Braving the new world, one brief at a time

Ironically, it didn’t take long for our AI experiment to become more of a lesson in humanity than a masterclass in image manipulation. Rather than treating this new creative tool like any other piece of new tech, we came to treat Midjourney as a new pseudo-member of our team. A ‘Mid-Junior’, if you will. And while it technically had the skills to do the job it was given, it didn’t yet have the experience or craft to deliver a polished output.

Like any fresh talent, it required patience, direction, instinct and a keen eye for detail to unlock its full potential, making our roles as creatives and producers all the more essential to the success of the project.

And that’s exactly how we intend to continue using AI in the future: putting the right ‘talent’ on the right job, with the right direction, one brief at a time, to deliver the best work (humanly) possible.

AI may be beckoning a brave new world but, as creatives, we’re still the architects of concept and custodians of craft – and we still have a necessary (six finger-less) hand in orchestrating the outputs of AI.

At least for now.

Special thanks to the brilliant minds at Heckler for VFX and Heckler Sound for music and SFX for helping us bring this campaign to life.

–

Top Image: Hannah Melanson