The company responsible for creating the AI voice of ARN ‘presenter’, Thy, has responded to public criticism about the on-air use of artificial intelligence.

In a statement released quietly on the Anzac Day public holiday and posted to its platform, ElevenLabs, the AI voice technology company used to create Thy, wrote:

“Thy was created with ElevenLabs’ AI voice technology. She’s based on an ARN Media employee who works in finance and gave consent for her voice to be used. Within an hour of uploading the voice samples, the synthetic version was live.”

Officially going public

This is the first time there has been public confirmation of how the voice was created, following backlash over Thy’s debut and ARN’s subsequent lack of transparency.

The launch triggered an industry-wide discussion on diversity, consent, and AI's place in creative audio environments after listeners noticed that Thy's appearance and accent resembled those of a young Asian woman, with no formal explanation from ARN at the time.

Key questions remain unanswered

ARN are yet to respond to two critical issues repeatedly raised by Mediaweek: whether the employee whose voice was used was paid for her participation, and how the voice and image selection fits into ARN’s diversity practices.

Positioning Thy as a creative experiment

The statement describes the creation of Thy as part of a broader strategy to explore "new forms of creative expression" through AI, emphasising that it is "not replacing people" but rather experimenting with more personal, automated listening experiences.

According to ARN, CADA currently reaches around 160,000 digital listeners.

“Thy offers a fully-automated listening experience, powered by our Text to Speech and voice cloning tools,” the statement reads. “She is part of how we’re making radio more personal, without losing what makes it compelling.”

Echoes of earlier deleted comments

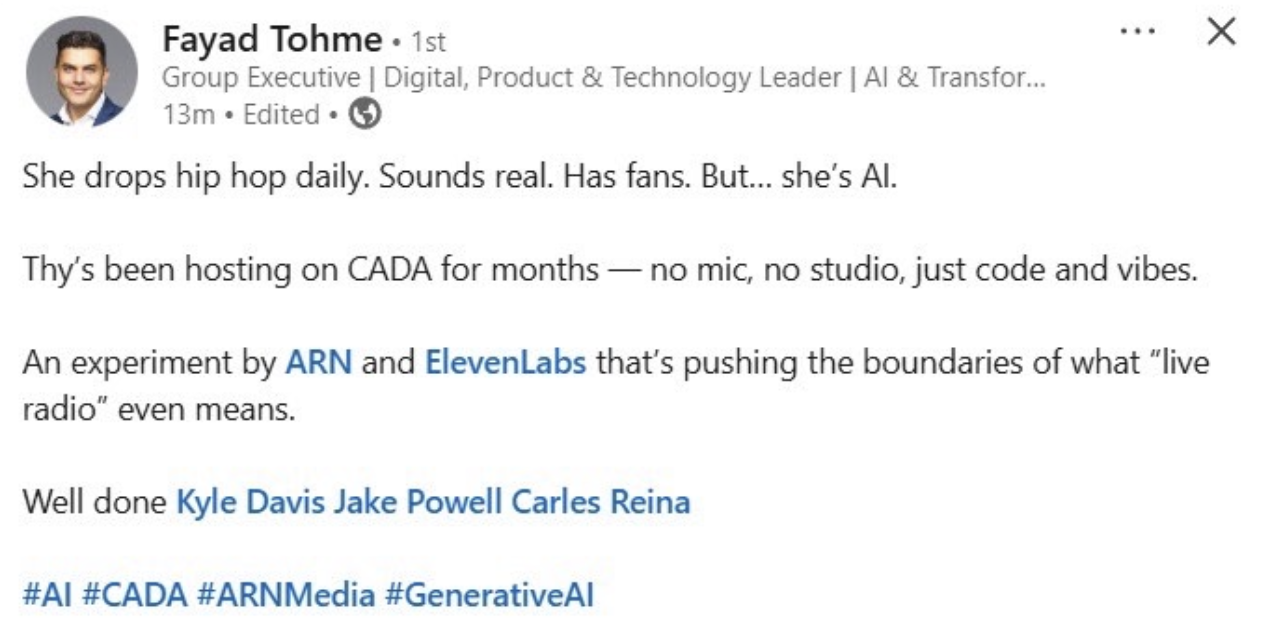

The introduction to the release mirrors wording from a since-deleted LinkedIn post by digital, product and technology leader Fayed Tohme.

At the time, Tohme had also described the project as an experiment in personalisation and AI-driven radio engagement.

The statement goes on to position the AI initiative alongside those of other audio players.

“Audacy uses AI to generate ads and podcasts. Futuri, SuperHiFi and Radio.Cloud are building full-stack automation tools for stations and the number of use cases keeps growing”.

Transparency still under scrutiny

Despite the company’s efforts to shift the conversation toward creative innovation and automation, key questions remain unanswered, particularly around compensation and representation.

For many in the media and advertising community, transparency around how synthetic voices are chosen, paid for, and visually represented is an essential part of ethical and inclusive AI deployment.